Boosting AI Performance: The Power of LLM-Friendly Content in Markdown

March 13, 2025

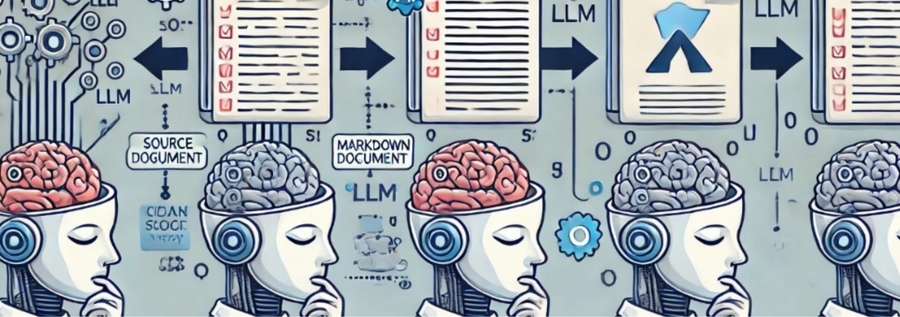

In the era of rapidly advancing artificial intelligence, large language models (LLMs) like GPT have become pivotal tools in content generation, customer support, and various other domains. However, to fully harness the potential of these models, it's crucial to optimize the way content is presented so that the models can accurately interpret and process the content. LLM-friendly content, particularly when structured in markdown, offers significant advantages over more complex formats like JSON or XML. This blog explores the importance of converting content into an LLM-friendly format, emphasizing how markdown can enhance the accuracy and performance of these models. By understanding the benefits of markdown, content creators can optimize their materials for better LLM processing, leading to more reliable and efficient AI-driven outcomes.

Introduction

Large Language Models (LLMs) have become increasingly integral to modern digital ecosystems. From powering chatbots to generating content and assisting in data analysis, LLMs like GPT have revolutionized how businesses and individuals interact with the technology. However, the effectiveness of these models hinges not just on their underlying algorithms but also on the quality and structure of the input they receive. As artificial intelligence (AI) continues to transform various industries, the need for content that is easily interpretable by LLMs has never been more critical. This blog delves into the importance of converting content into an LLM-friendly format, with a particular focus on markdown, and discusses how this practice can significantly enhance the accuracy and performance of LLMs.

What is LLM-Friendly Content?

LLM-friendly content is designed to be easily parsed and understood by language models. Unlike traditional content that may be unstructured or scattered across various formats (like plain text, HTML, or PDFs), this type of content is clear, structured, and devoid of unnecessary complexity that could confuse the model or lead to inaccurate interpretations. The goal is to present information in a way that aligns with the model's processing capabilities, ensuring that it can generate the most accurate and relevant responses possible.

Why Convert Content into LLM-Friendly Formats?

Converting traditional content into LLM-friendly formats offers several key benefits that directly impact the performance and accuracy of LLMs:

- Improved Parsing and Interpretation: When content is presented in a structured format, LLMs can more easily parse and understand the information. For example, clearly defined headings and subheadings allow the model to understand the context of the text better, reducing the likelihood of misinterpretation.

- Enhanced Accuracy: Structured content helps LLMs distinguish between different types of information, such as questions, instructions, or data points. This distinction is crucial for generating accurate and relevant responses. For instance, in a markdown document, a bulleted list is more likely to be recognized as a list of items rather than a single, unconnected paragraph.

- Reduced Ambiguity: Unstructured content can lead to ambiguities in how the LLM processes information. By converting content into a structured and simple format, you minimize the chances of the model getting confused by unclear or poorly organized data. It emphasizes clarity, logical flow, and simplicity.

- Consistency and Reproducibility: Consistent formatting across documents ensures that LLMs receive inputs in a predictable structure. This consistency is essential for reproducibility, especially when generating content or performing tasks that require a high degree of accuracy.

- Facilitates Fine-Tuning and Training: For organizations that fine-tune LLMs on specific datasets, having content in a LLM-friendly format can streamline the training process. The structured nature of the format makes it easier to identify and isolate relevant sections of text for training, leading to more efficient and effective model updates. It helps in N-shot tuning also for majority of the LLMs to accommodate dynamic training examples which resembles their training formats.

Use Cases of LLM-Friendly Content

Here are just a few use cases for LLM friendly content:

- Content Generation: For businesses that rely on LLMs for generating articles, blog posts, or other forms of content, providing well-structured LLM-friendly formatted input ensures that the outputs are coherent and align with the desired tone and format.

- Documentation and Knowledge Bases: In tech companies, where documentation is key, converting content into LLM-friendly format allows LLMs to generate or update technical documentation more accurately, preserving the logical flow and clarity of the original content.

- Customer Support: LLMs used in customer support can provide more accurate and helpful responses if they are trained on structured content. LLM-friendly format, with its clear distinctions between different sections and types of information, ensures that the LLM can access the most relevant data quickly.

Markdown: A Preferred Format for LLMs

Markdown is a lightweight markup language that has gained popularity for its simplicity and readability. Originally created to be an easy-to-write and easy-to-read format for text, markdown has become an ideal choice for creating LLM-friendly content. The primary advantage of markdown is its straightforward syntax, which makes it easy for both humans and machines to parse. Unlike more complex formats like JSON or XML, which are designed for data interchange between systems, markdown is designed for readability and minimalism. This simplicity is key to making content LLM-friendly. Markdown allows content creators to structure their text with headers, lists, links, and emphasis without adding the complexity of nested tags or attributes that could potentially confuse an LLM.

For example, in markdown, a heading is simply written as:

# This is a Heading

This is much easier for an LLM to interpret than a comparable XML structure:

<heading level="1">This is a Heading</heading>

The former is not only easier to write and read but also less prone to errors during processing, making it a more reliable choice for LLMs.

Advantages of Markdown over JSON or XML for LLMs

While JSON and XML are powerful tools for data interchange, they are not inherently designed for readability or simplicity. These formats often include nested structures, attributes, and tags that can add unnecessary complexity when the goal is simply to present content for interpretation by an LLM (for e.g. simple reasoning, generative use cases, chat interactions etc.):

- Readability and Simplicity: Markdown's simplicity ensures that the content is easy to read and understand, not just for humans but also for LLMs. The absence of nested tags and complex structures means that the model can focus on the content itself rather than getting bogged down by extraneous formatting information. The hierarchical nature of markdown formatting (e.g., headers and sub headers) allows LLMs to discern the logical flow of information, making it easier to follow and interpret.

- Reduced Processing Overhead: When processing JSON or XML, an LLM must first navigate through layers of tags and attributes to extract the actual content. This additional processing step can introduce errors or lead to the model misinterpreting the content. markdown, by contrast, presents the content in a straightforward manner, reducing the cognitive load on the model and improving processing efficiency.

- Alignment with Natural Language: markdown aligns closely with natural language, making it more intuitive for LLMs to parse. The format's emphasis on text and minimal use of symbols helps LLMs maintain context and continuity, which is essential for generating accurate and coherent responses.

- Flexibility and Adaptability: markdown is versatile and can be easily converted to other formats if needed. For instance, markdown can be converted into HTML, PDF, or even JSON with relative ease, making it a flexible choice for content that may need to be repurposed across different platforms. This flexibility also means that content originally written in markdown is more adaptable to various LLM use cases.

LLM-Friendly Content and Retrieval Augmented Generation

In Retrieval-Augmented Generation (RAG) the accuracy and efficiency of LLM outputs are heavily influenced by the quality of the retrieved content. LLM-friendly content, particularly when structured in formats like markdown, ensures that the information is clear, concise, and easily interpretable by the model. This leads to more accurate retrieval and generation processes, as the LLM can better understand and integrate the retrieved content into its responses. By optimizing content for LLMs, RAG systems can produce more reliable and contextually relevant outputs, enhancing the overall effectiveness of AI-driven tasks.

Final Thoughts

In the age of AI, the format in which content is presented significantly impacts how effectively large language models (LLMs) interpret and respond. Both Markdown and XML have their strengths, and the right choice depends on the complexity and structure of the content. Markdown is preferred for its readability, simplicity, and token efficiency. It provides a clear, human-friendly way to structure information using headings, lists, and basic formatting without unnecessary verbosity. This makes it ideal for general LLM-friendly content such as blogs, documentation, FAQs, and structured instructions, as well as for prompt engineering where clarity matters but strict structural enforcement is unnecessary. Since Markdown keeps token usage minimal, it is also beneficial for efficient LLM processing.

XML, on the other hand, is useful when a prompt requires strict sectioning, deep nesting, or explicit structural clarity. With defined tags such as <context>, <instructions>, and <example>, XML ensures that the LLM can precisely distinguish different sections, making it ideal for complex referencing, legal or technical documentation, and multi-part reasoning tasks. Additionally, XML is well-suited for scenarios where post-processing is required, as it allows for easy extraction of structured data using predefined tags. While XML provides better control over structure, its verbosity increases token count, making it less efficient for simpler prompts where Markdown would suffice.

For most applications, Markdown serves as the preferred default due to its balance of clarity, efficiency, and ease of use. However, when dealing with highly structured, interdependent, or nested prompts, XML's explicit demarcation provides better control and precision. The choice ultimately depends on whether human readability and flexibility are the priority or whether structured precision is necessary for parsing and logical organization. Understanding these differences allows creators, developers, and businesses to optimize their content for AI-driven interactions, leading to better outcomes and more accurate insights.